3D ShapeNets: A Deep Representation for Volumetric Shapes

Abstract

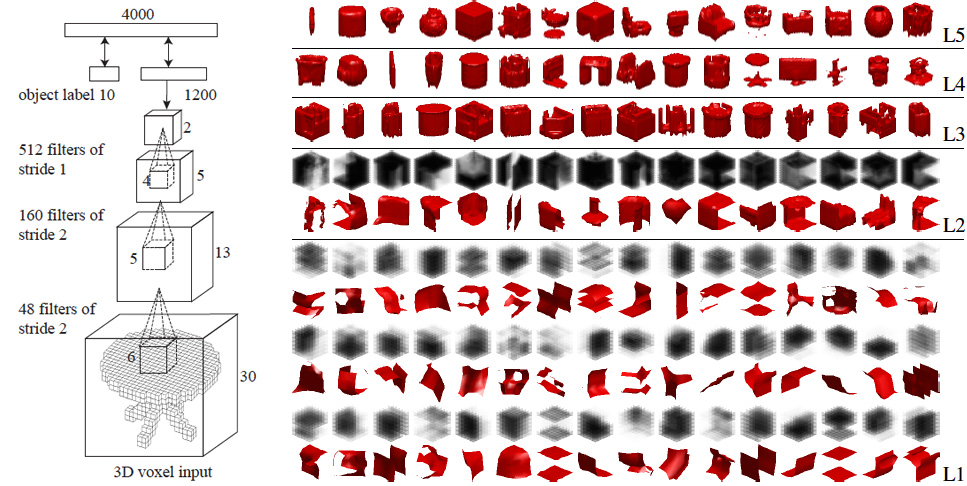

3D shape is a crucial but heavily underutilized cue in object recognition, mostly due to the lack of a good generic shape representation. With the recent boost of inexpensive 2.5D depth sensors (e.g. Microsoft Kinect), it is even more urgent to have a useful 3D shape model in an object recognition pipeline. Furthermore, when the recognition has low confidence, it is important to have a fail-safe mode for object recognition systems to intelligently choose the best view to obtain extra observation from another viewpoint, in order to reduce the uncertainty as much as possible. To this end, we propose to represent a geometric 3D shape as a probability distribution of binary variables on a 3D voxel grid, using a Convolutional Deep Belief Network. Our model naturally supports object recognition from 2.5D depth map, and view planning for object recognition. We construct a large-scale 3D computer graphics dataset to train our model, and conduct extensive experiments to study this new representation.

Paper

-

Z. Wu, S. Song, A. Khosla, F. Yu, L. Zhang, X. Tang and J. Xiao

3D ShapeNets: A Deep Representation for Volumetric Shape Modeling

Proceedings of 28th IEEE Conference on Computer Vision and Pattern Recognition (CVPR2015)

Oral Presentation

Supplementary Materials

Data

- ModelNet10.zip: this file contains the 10 categories of CAD models used to train our deep network. Training and testing split is available.

- ModelNet40.zip: this file contains the 40 categories of CAD models used to train our deep network. Training and testing split is available.

- Full Dataset: Please email Shuran Song.

ModelNet Benchmark Leaderboard

Please check here .

Presentation

|

Acknowledgement

This work is supported by gift funds from Intel Corporation and Project X grant to the Princeton Vision Group, and a hardware donation from NVIDIA Corporation. Z.W. is also partially supported by Hong Kong RGC Fellowship. We thank Thomas Funkhouser, Derek Hoiem, Alexei A. Efros, Andrew Owens, Antonio Torralba, Siddhartha Chaudhuri, and Szymon Rusinkiewicz for valuable discussion.